Math Cracks – What is a Derivative, Really?

It seemed important to me to go over the concept of the derivative of a function. The process of differentiation (that is, calculating derivatives) is one of the most fundamental operations in Calculus, and even in math as a whole. In this Math Crack tutorial I will try to shed some light on the meaning and interpretation of what a derivative is and does.

First of all, to clarify the scope of this tutorial: we won't be solving specific practice problems involving derivatives. Instead, we will try to understand what we are doing when we operate with derivatives. Once we understand what we are doing, we have a WAYYY better chance of solving problems.

DEFINITION OF A DERIVATIVE (NOT THE BORING ONE)

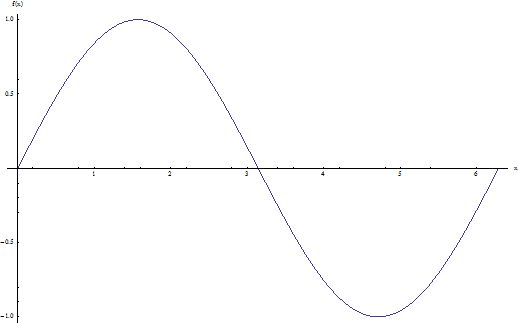

To get started, it is mandatory to write at least the definition of a derivative. Assume that \(f\) is a function and \({{x}_{0}}\in dom\left( f \right) \). Ok, we started with technicalities already? All we are saying is that \(f\) is a function. Think of a function \(f\) by its graphical representation shown below:

Also, when we say that "\({{x}_{0}}\in dom\left( f \right) \)", all we are saying is that \({{x}_{0}}\) is a point where the function is well defined (so it belongs to its domain). But hold on, is it possible for a point \({{x}_{0}}\) to make a function NOT well defined? Certainly! Consider the following function:

\[f\left( x \right)=\frac{1}{x-1}\]

This function is NOT well defined at \({{x}_{0}}=1\). Why is it not well defined at \({{x}_{0}}=1\)? Because if we plug the value \({{x}_{0}}=1\) into the function we get

\[f\left( 1 \right)=\frac{1}{1-1}=\frac{1}{0}\]

which is an INVALID operation (as you know from primary school, you cannot divide by zero, at least with the traditional arithmetic rules), so then the function is not well defined at \({{x}_{0}}=1\). For a function to be well defined at a point means simply that the function can be evaluated at that point, without the existence of any invalid operations.
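To make the idea of "well defined at a point" concrete, here is a minimal Python sketch. The helper name `is_well_defined` is made up for this illustration, and it only detects division by zero, which is the one invalid operation discussed above.

```python
def f(x):
    """The example function from the text: f(x) = 1 / (x - 1)."""
    return 1 / (x - 1)


def is_well_defined(func, x0):
    """Return True if func can be evaluated at x0 without dividing by zero.

    Hypothetical helper for illustration only: it checks nothing except
    division by zero.
    """
    try:
        func(x0)
        return True
    except ZeroDivisionError:
        return False


print(is_well_defined(f, 2))  # f(2) = 1 / (2 - 1) can be evaluated
print(is_well_defined(f, 1))  # f(1) would require dividing by zero
```

In this sketch, \({{x}_{0}}=2\) belongs to the domain of \(f\) and \({{x}_{0}}=1\) does not.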

So now we can say it again, because now you know what we mean: Assume \(f\) is a function and \({{x}_{0}}\in dom\left( f \right) \). The derivative at the point \({{x}_{0}}\) is defined as

\[f'\left( {{x}_{0}} \right)=\lim_{x \to {x_0}}\,\frac{f\left( x \right)-f\left( {{x}_{0}} \right)}{x-{{x}_{0}}}\]

when this limit exists.
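One way to get a feel for this limit is to evaluate the quotient numerically for values of \(x\) closer and closer to \({{x}_{0}}\). The sketch below does that for an example of our own choosing, \(f\left( x \right)={{x}^{2}}\) at \({{x}_{0}}=3\) (neither the function nor the point comes from the definition itself). A numerical table like this only suggests the value of the limit; it does not prove that the limit exists.

```python
def difference_quotient(f, x0, x):
    """The quotient (f(x) - f(x0)) / (x - x0) from the definition, for x != x0."""
    return (f(x) - f(x0)) / (x - x0)


def f(x):
    """Example function chosen for this illustration: f(x) = x^2."""
    return x ** 2


x0 = 3.0
for h in (0.1, 0.01, 0.001, 0.0001):
    from_right = difference_quotient(f, x0, x0 + h)  # x approaches x0 from the right
    from_left = difference_quotient(f, x0, x0 - h)   # x approaches x0 from the left
    print(h, from_left, from_right)
```

Algebraically the quotient here equals \(6+h\) from the right and \(6-h\) from the left, so both columns close in on 6, which is the value of \(f'\left( 3 \right) \).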

Ok, that is the meat of the matter, and we will discuss it in a second. I would like you to have some things EXTREMELY clear here:

• When the above limit exists, we call it \(f'\left( {{x}_{0}} \right) \), and it is referred to as the “derivative of the function \(f\left( x \right) \) at the point \({{x}_{0}}\)”. So then, \(f'\left( {{x}_{0}} \right) \) is simply a symbol that we use to refer to the derivative of the function \(f\left( x \right) \) at the point \({{x}_{0}}\) (when it exists). We could have used any other symbol, such as “\(deriv{{\left( f \right)}_{{{x}_{0}}}}\)” or “\(derivative\_f\_{{x}_{0}}\)”. But some aesthetic sense makes us prefer “\(f'\left( {{x}_{0}} \right) \)”.

The point is that it is a MADE UP symbol to REFER to the derivative of the function \(f\left( x \right) \) at the point \({{x}_{0}}\). The funny thing in Math is that notation matters. Even though a concept exists regardless of the notation used to express it, a logical, flexible, compact notation can make things catch fire, as opposed to what can happen with a cumbersome, uninspired notation.

The Role Played by Notation

(Historically, the two simultaneous developers of a usable version of the concept of derivative, Leibniz and Newton, used radically different notations. Newton used \(\dot{y}\), whereas Leibniz used \(\frac{dy}{dx}\). Leibniz's notation caught on and facilitated the full development of Calculus, whereas Newton’s notation caused more than one headache. Really, it was that important.)

• The derivative is a POINTWISE operation. This means that it is an operation done to a function at a given point, and it needs to be verified point by point. Of course, in a typical domain like the real line \(\mathbb{R}\) there is an infinite number of points, so it may take a while to check by hand whether a derivative is defined at each point. BUT, there are some rules that greatly simplify the work: we compute the derivative at one generic point \({{x}_{0}}\) and then analyze for which values of \({{x}_{0}}\) the limit that defines the derivative exists. So you can relax, because the gritty handwork won’t be too taxing, if you know what you’re doing of course.

• When the derivative of a function \(f\) exists at a point \({{x}_{0}}\), we say that the function is differentiable at \({{x}_{0}}\). Also, we can say that a function is differentiable on a REGION (a region is a set of points) if the function is differentiable at EACH point of that region. So then, even though the concept of derivative is a pointwise concept (defined at a specific point), it can be understood as a global concept when it is defined for each point in a region.

• If we define \(D\) as the set of all points in the real line where the derivative of a function is defined, we can define the derivative function \(f'\) as follows:

\[\begin{aligned} & f':D\subseteq \mathbb{R}\to \mathbb{R} \\ & x\mapsto f'\left( x \right) \\ \end{aligned}\]

This is a function because each \(x\) in \(D\) is uniquely associated with the value \(f'\left( x \right) \). The set of all pairs \(\left( x,f'\left( x \right) \right) \), for \(x\in D\), forms a function, and you can do all the things you can do with functions, such as graphing them.

That should settle the question that many students have about derivatives, as they wonder how we have a derivative “function”, when the derivative is something that is computed at a certain specific point. Well, the answer is that we compute the derivative at many points, which provides the foundation to define the derivative as a function.
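As a worked illustration of the generic-point approach (the choice of function is ours, reusing the earlier example \(f\left( x \right)=\frac{1}{x-1}\)), the limit can be computed once at an arbitrary point \({{x}_{0}}\ne 1\):

\[\begin{aligned} f'\left( {{x}_{0}} \right) &= \lim_{x \to {x_0}}\,\frac{\frac{1}{x-1}-\frac{1}{{{x}_{0}}-1}}{x-{{x}_{0}}} \\ &= \lim_{x \to {x_0}}\,\frac{\left( {{x}_{0}}-1 \right)-\left( x-1 \right)}{\left( x-1 \right)\left( {{x}_{0}}-1 \right)\left( x-{{x}_{0}} \right)} \\ &= \lim_{x \to {x_0}}\,\frac{-1}{\left( x-1 \right)\left( {{x}_{0}}-1 \right)} = -\frac{1}{{{\left( {{x}_{0}}-1 \right)}^{2}}} \end{aligned}\]

The limit exists for every \({{x}_{0}}\ne 1\), and at \({{x}_{0}}=1\) the function is not even defined. So for this example \(D=\mathbb{R}\setminus \left\{ 1 \right\}\), and the derivative function is \(f'\left( x \right)=-\frac{1}{{{\left( x-1 \right)}^{2}}}\) for \(x\in D\).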

Final Words: Notation Hell

When the concept of derivative was put into the modern form we know by Newton and Leibniz (I put the emphasis on the term “modern form”, since many of the key ideas of Calculus had already been explored by the Greeks and others, in a more intuitive and less formal way, a LONG time ago), they chose radically different notations. Newton chose \(\dot{y}\), whereas Leibniz chose \(\frac{dy}{dx}\). So far so good. But the concept of derivative means much less if we don’t have powerful derivative theorems.

Using their respective notations, they both had little trouble proving basic differentiation theorems, such as linearity and the product rule. But Newton didn’t see the need to formally state the Chain Rule, possibly because his notation didn’t lend itself to it, whereas in Leibniz’s notation the Chain Rule shows itself almost like a “Duh” rule. To be more precise, assume that \(y=y\left( u \right) \) is a function of \(u\) and that \(u=u\left( x \right) \) is a function of \(x\).

It is a natural question to ask if I can compute the derivative of the composition \(y\left( u\left( x \right) \right) \) in an easy way, based on the derivatives of \(y\) and \(u\). The answer to this question is the Chain Rule. Using Leibniz notation the rule is

\[\frac{dy}{dx}=\frac{dy}{du}\frac{du}{dx}\]

It is almost as if you could cancel the \(du\)’s like:

\[\frac{dy}{dx}=\frac{dy}{\cancel{du}}\frac{\cancel{du}}{dx}\]

but it is not exactly like that. Still, that is the beauty of Leibniz’s notation: it has a strongly intuitive appeal (and the “canceling” of the \(du\)’s is almost a reality, except that it is done at the \(\Delta u\) level and there are limits involved). You still need to understand what the rule is saying, though. In words:

“The derivative of the compound function \(y\left( u\left( x \right) \right) \) is the same as the derivative of \(y\) at the point \(u\left( x \right) \) multiplied by the derivative of \(u\) at the point \(x\)”
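The statement above can be checked numerically. The sketch below uses functions of our own choosing, \(y\left( u \right)=\sin \left( u \right) \) and \(u\left( x \right)={{x}^{2}}\), and approximates every derivative with a symmetric difference quotient. That is a numerical stand-in for the limit, not the definition itself, and the step size `h` is an assumption. Agreement of the two sides at one point illustrates the rule; it does not prove it.

```python
import math


def derivative(f, x0, h=1e-6):
    """Approximate f'(x0) with a symmetric difference quotient (step h is assumed)."""
    return (f(x0 + h) - f(x0 - h)) / (2 * h)


def u(x):
    """Inner function chosen for this illustration: u(x) = x^2."""
    return x ** 2


def y(v):
    """Outer function chosen for this illustration: y(u) = sin(u)."""
    return math.sin(v)


def composition(x):
    """The compound function y(u(x))."""
    return y(u(x))


x = 1.5
left_side = derivative(composition, x)             # dy/dx, computed directly
right_side = derivative(y, u(x)) * derivative(u, x)  # (dy/du at u(x)) * (du/dx at x)
print(left_side, right_side)
```

The two printed numbers should agree to many decimal places, and both should be close to the exact value \(\cos \left( {{x}^{2}} \right)\cdot 2x\) at \(x=1.5\).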

The Chain Rule using Newton’s notation gets the following form:

\[\overset{\bullet }{\mathop{\left( y\circ u \right)}}\,=\overset{\bullet }{\mathop{y}}\,\left( u\left( x \right) \right)\overset{\bullet }{\mathop{u}}\,\left( x \right)\]

Quite a bit less pretty, isn’t it? But guess what, Newton’s Chain Rule says EXACTLY THE SAME thing as

\[\frac{dy}{dx}=\frac{dy}{du}\frac{du}{dx}\]

Yet this latter notation caught on and helped enormously in the fast development of modern Calculus, whereas Newton’s form was way less beloved. Even though the theorems were saying exactly the same thing, one was golden and the other one not so much. Why? NOTATION, my friend.